A Preliminary Study on Augmenting Speech Emotion Recognition Using a Diffusion Model

Interspeech 2023

Ibrahim Malik, Siddique Latif, Raja Jurdak, and Björn Schuller

Abstract

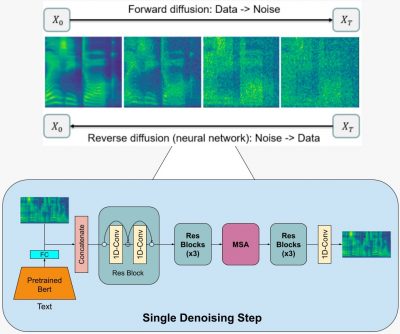

In this paper, we propose to utilise diffusion models for data augmentation in speech emotion recognition (SER). In particular, we present an effective approach that uses improved denoising diffusion probabilistic models (IDDPM) to generate synthetic emotional data. We condition the IDDPM on the textual embedding from bidirectional encoder representations from transformers (BERT) to generate high-quality synthetic emotional samples in different speakers’ voices. We implement a series of experiments and show that better-quality synthetic data helps improve SER performance. We compare results with generative adversarial networks (GANs) and show that the proposed model generates better-quality synthetic samples, which considerably improve SER performance when used to augment the training data.
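As a rough illustration of the diffusion side of this approach, the sketch below implements only the forward (noising) process with the cosine noise schedule commonly associated with IDDPM. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the 1000-step setting, and the 80 x 100 array standing in for a speech feature map are all hypothetical, and the BERT-conditioned denoising network that would be trained to predict the added noise is not shown.

```python
import numpy as np


def cosine_alpha_bar(num_steps: int, s: float = 0.008) -> np.ndarray:
    """Cumulative signal level alpha_bar_t for t = 0..num_steps (cosine schedule)."""
    t = np.arange(num_steps + 1) / num_steps
    f = np.cos((t + s) / (1 + s) * np.pi / 2) ** 2
    return f / f[0]  # normalise so that alpha_bar_0 == 1


def q_sample(x0: np.ndarray, t: int, alpha_bar: np.ndarray, rng: np.random.Generator):
    """Draw x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I) and return the noise used."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise


rng = np.random.default_rng(0)
alpha_bar = cosine_alpha_bar(1000)       # assumed number of diffusion steps
x0 = rng.standard_normal((80, 100))      # hypothetical stand-in for a speech feature map
x_t, noise = q_sample(x0, 500, alpha_bar, rng)
```

In a full pipeline, a network would be trained to recover `noise` from `x_t`, the step index, and a conditioning vector such as a text embedding; sampling then runs the learned denoising in reverse to produce synthetic examples for augmentation.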

Audio Samples

| Emotion | Original | Synthetic (Proposed Model) |
|---|---|---|
| Neutral | | |
| Neutral | | |
| Sad | | |
| Sad | | |
| Happy | | |
| Happy | | |
| Angry | | |
| Angry | | |